Unity XR Hands Setup: Platform-Agnostic Hand Tracking Features Are Here!

Unity quietly released a new XR package that gives you access to hand tracking data from devices that support hand tracking. But does this work out of the box with every VR/AR device available today? Well, the answer is no. However, this is a HUGE step toward platform-agnostic hand tracking, because any VR/AR headset manufacturer can now adhere to Unity’s specification, which allows plugins to work with the XR Interaction Toolkit and XR Hands packages, as described in Unity’s guide on how to implement a provider.

What is available in the Unity XR Hands pre-release package?

Currently, Unity provides one new package, com.unity.xr.hands, along with a sample project that includes a Hand Visualizer component. This component lets you bind left-hand and right-hand prefabs (also included in this package). It builds a hand mesh in real time, keeps track of hand joints, tracks the hands’ linear and angular velocities, and can display debugging information such as hand joints and hand velocity.

How can you get Unity XR Hands set up?

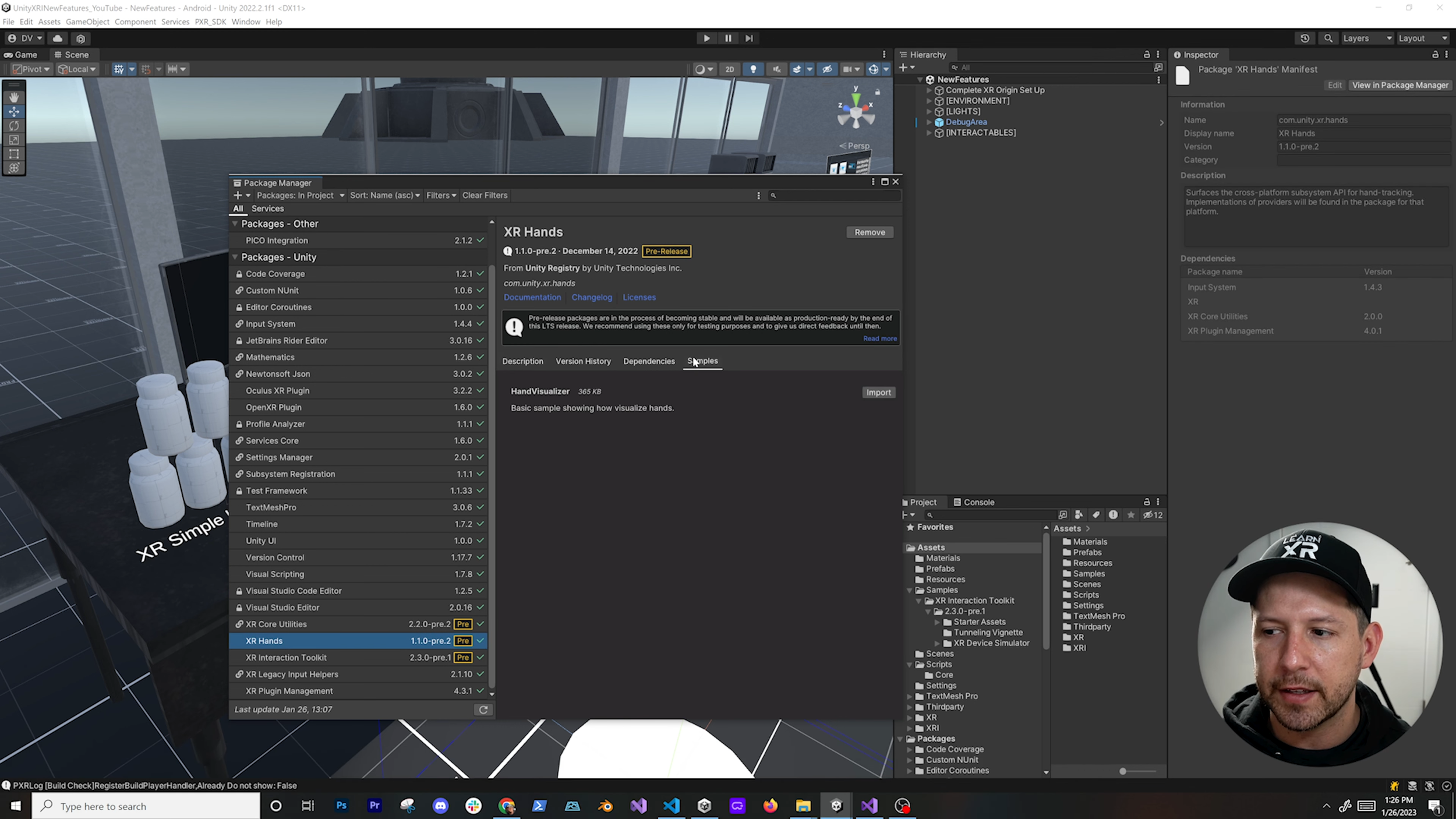

Fig 1.0 - Unity XR Hand Visualizer (Script)

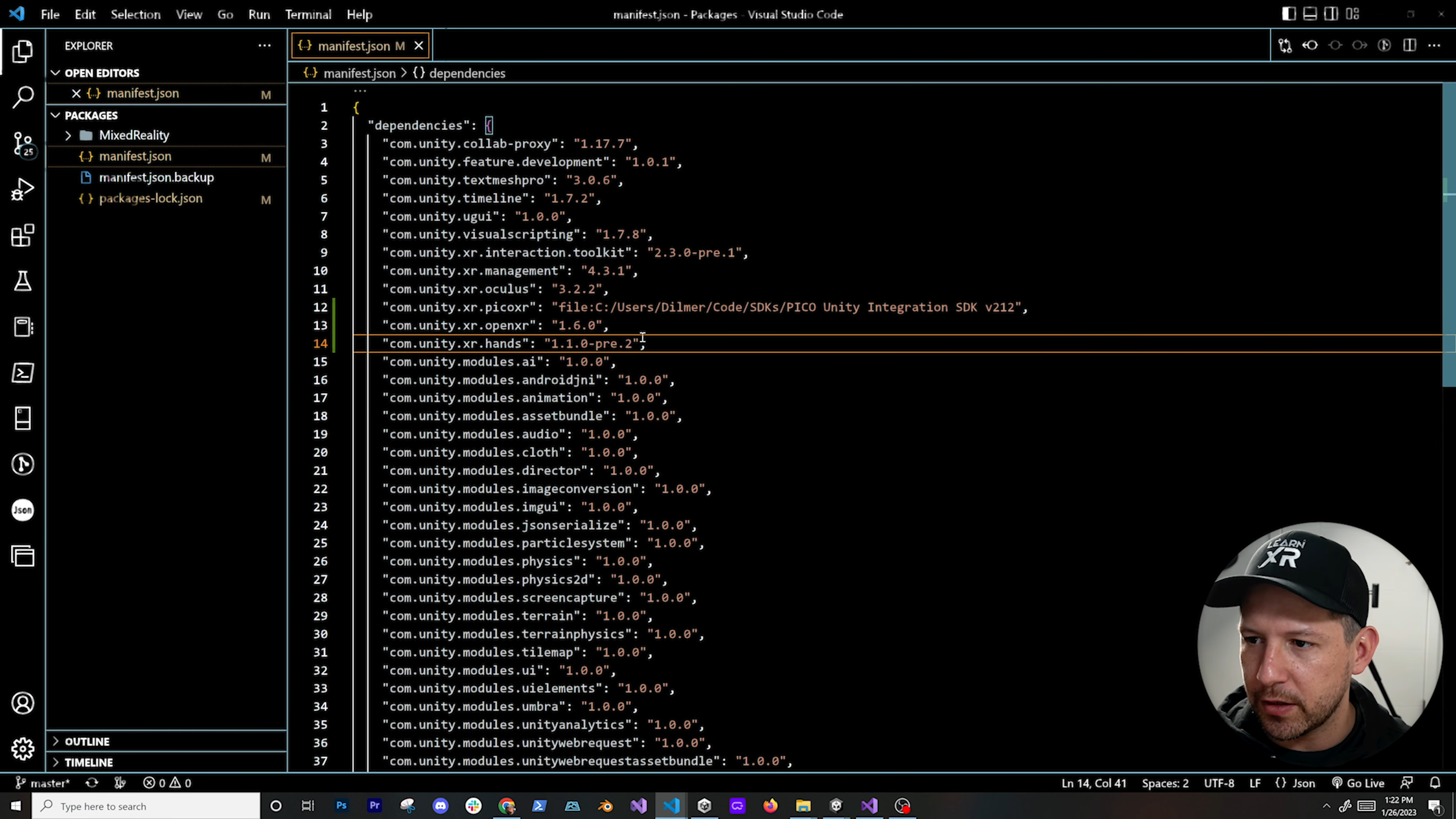

You could open the Package Manager in Unity and search for com.unity.xr.hands, but I personally find it a lot easier to open my project’s manifest.json and simply add the lines required to set up the packages. In this case, add these two lines: "com.unity.xr.openxr": "1.6.0" and "com.unity.xr.hands": "1.1.0-pre.2". Notice that we are also using a specific version of OpenXR: version 1.6.0 has been updated to work with XR Hands, and earlier versions won’t work, so make sure you use 1.6.0 or greater.
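For reference, here is a minimal sketch of how the relevant part of the project manifest (Packages/manifest.json) could look after adding the two entries. It assumes both lines go inside the existing "dependencies" object; the other dependencies your project already lists are omitted here and should stay as they are. The version numbers are the ones used in this article at the time of writing.

```json
{
  "dependencies": {
    "com.unity.xr.hands": "1.1.0-pre.2",
    "com.unity.xr.openxr": "1.6.0"
  }
}
```

Since JSON does not allow comments, make sure each entry except the last one in the "dependencies" object ends with a comma, otherwise Unity will fail to parse the manifest.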

To import the samples, simply go back to Unity after updating your manifest.json and wait for both new packages to import and load. Once the process completes, go to Window > Package Manager > XR Hands and find the Samples tab to import them.

Add an XR Origin by right-clicking in the Unity Hierarchy and selecting XR > XR Origin.

Create a new GameObject named “Hand Visualizer” under XR Origin > Camera Offset, then add the “Hand Visualizer” component to it (see Fig 1.0).

Both the LeftHand and RightHand meshes can be populated using the existing models included in the samples imported earlier. Joint and Velocity prefabs are also available within the same samples.

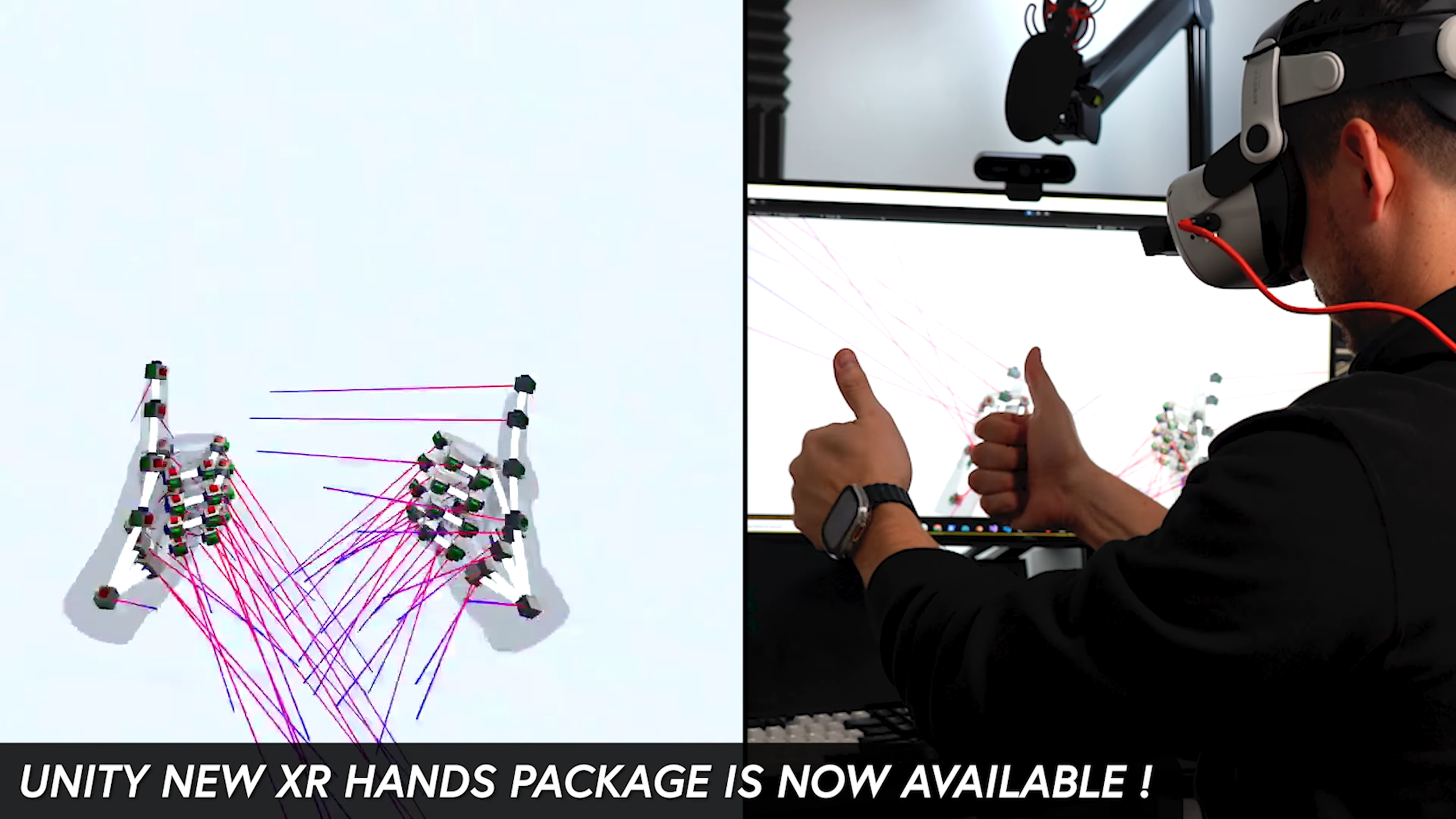

A few reference images that illustrate some of the new features available with Unity XR Hands

Can you test Unity XR Hands with Oculus Link?

Yes, and that’s exactly what I ended up doing to test this package. Just be sure to have a PC, connect your Meta headset to your computer via USB-C, and have the Oculus app running before hitting Play in the Unity editor. Covering every step is beyond the scope of this article, but if you’re curious about getting this to work, be sure to follow the steps in this video.

Well, that’s everything for this article. If you found it helpful or have additional questions, please let me know below. Thank you!