My Experience With Cognitive3D VR/AR Analytics And SDK Integration For Unity!

Today, I'd love to share some insights from my experience with Cognitive3D's VR/AR tools after testing their Unity SDK and dashboards for the last few weeks. First, I'll cover the basics by explaining what Cognitive3D is, and at the end I'll share my honest opinion on why I believe it differs so much from other analytics tools.

What is Cognitive3D?

Cognitive3D provides a robust set of analytics tools designed for VR & AR experiences. It tracks a lot of information from your users' sessions by acquiring data from HMDs (Head Mounted Displays), controllers, and a variety of hardware stats such as battery, processor, cameras, etc. There are many features within Cognitive3D, but to keep this straight to the point, I will mention the most important ones below:

Fig 1.0 - Scene Explorer w/ VR Training Scene

Scene Explorer - allows you to replay your users' sessions. As soon as you hit Play in Unity, the session is recorded. How much data you see depends on the level of integration. At the minimum integration level, you can see your users' movements (HMD & controllers), the areas they're focusing on (their gaze), and the objects they're interacting with. (See Fig 1.0)

Scene Viewer - provides you with a quick view of the 3D environment you uploaded to Cognitive3D. This is very important because it gives you an aggregate view of the areas users are focusing on the most, filtered by the date range you specify in the portal.

Dynamic Objects - honestly one of my favorite features. You configure them in Unity by adding a collider and the “Dynamic Object” script, which is part of the Cognitive3D SDK. Objects configured this way capture precise gaze information, so you know exactly which areas users are looking at the most. The results are visualized on the objects as heatmaps and cube aggregation. (See Fig 1.1)

Objectives and Exit Polls - I personally didn’t use either of these features, mainly because I ran out of time, but the idea behind Objectives is amazing. With Objectives, you set rules in the portal based on what was captured during a session. For instance, if a user must look at 3 specific objects during the session, you can define that as a rule and track how many users achieve the objective. You can also create custom events and use them as logic within your objective definitions. (I show you how custom events work in more detail in this video). Exit Polls are pretty much what the name suggests. I didn’t test them personally, but they are a great way to capture user feedback right inside your VR/AR experience as users are about to end their session.

Fig 1.1 - Object Explorer showing Jacket Dynamic Object results

What’s the process of Integrating Cognitive3D into Unity?

This was too easy. It was harder for me to make a video than it was to integrate it into Unity. Why? Because, as with any other analytics tool, it takes time for recorded session data to be processed and ready for review.

OK, integrating Cognitive3D is super easy. Let me walk you through the steps:

Fig 1.2 - Cognitive3D Project Creation

Create a new Cognitive3D Project by using their portal. If you don’t have an account, simply create a FREE account here and you should be able to do everything I walk you through below using a free account. (See Fig 1.2)

On the next page, click “Generate Key” to generate a Developer Key, then copy it. You will enter it in Unity during the Cognitive3D setup a few steps below.

Use an existing Unity project or create a new one to follow along. In my case, I used Unity 2022 LTS. I'm sure it works with older versions, but if you are using Git, I recommend working on a separate R&D branch during the integration, or creating an entirely new project to test with.

In Unity, go to Window > Package Manager > Add package from git URL, then enter https://github.com/CognitiveVR/cvr-sdk-unity.git (this will download the Cognitive3D SDK and install it in your project).

Now you should see a “Welcome” popup from Cognitive3D. Go through the steps and enter your Developer Key.

Next, you will see two options: Quick Setup and Advanced Setup.

The Quick Setup will simply allow you to get started by configuring your HMD and Controller(s).

Advanced Setup configures your HMD and controllers as well, and also lets you set up dynamic objects. I recommend starting with Quick Setup.

See the images below for a reference of how my Quick Setup looks when using the Meta XR packages, also known as Oculus Integration.

One of the last steps in the Cognitive3D setup will be to upload the Scene Geometry. Once this is completed, you will be able to go back to the Cognitive3D portal and review the scene using the Scene Viewer.

Hit Play in Unity and record a few sessions by playing your XR experience.

After this point, you will be able to go to the Cognitive3D portal and review your users' data. Let’s look at what information is available in the next section.

What Data Is Available With Cognitive3D?

There is a section I didn’t talk much about in my latest Cognitive3D video, but it is very important, and I'd like to mention it today. Cognitive3D provides something called “Spatial Optimization,” which offers you a few key metrics about your XR experience:

Spatial Insights

Comfort Insights: This score reflects how comfortable the experience is for the participants. It is a blend of frame rate, controller ergonomics, and headset orientation.

Presence: This score reflects how immersed participants are in the app experience. Higher numbers mean better presence.

Sitting & Standing: This tracks the percentage of time your users spend sitting vs. standing.

You also have access to Headset Orientation and Controller Ergonomics visualizations.

Below, you can look at a few example visualizations found in the Cognitive3D portal…

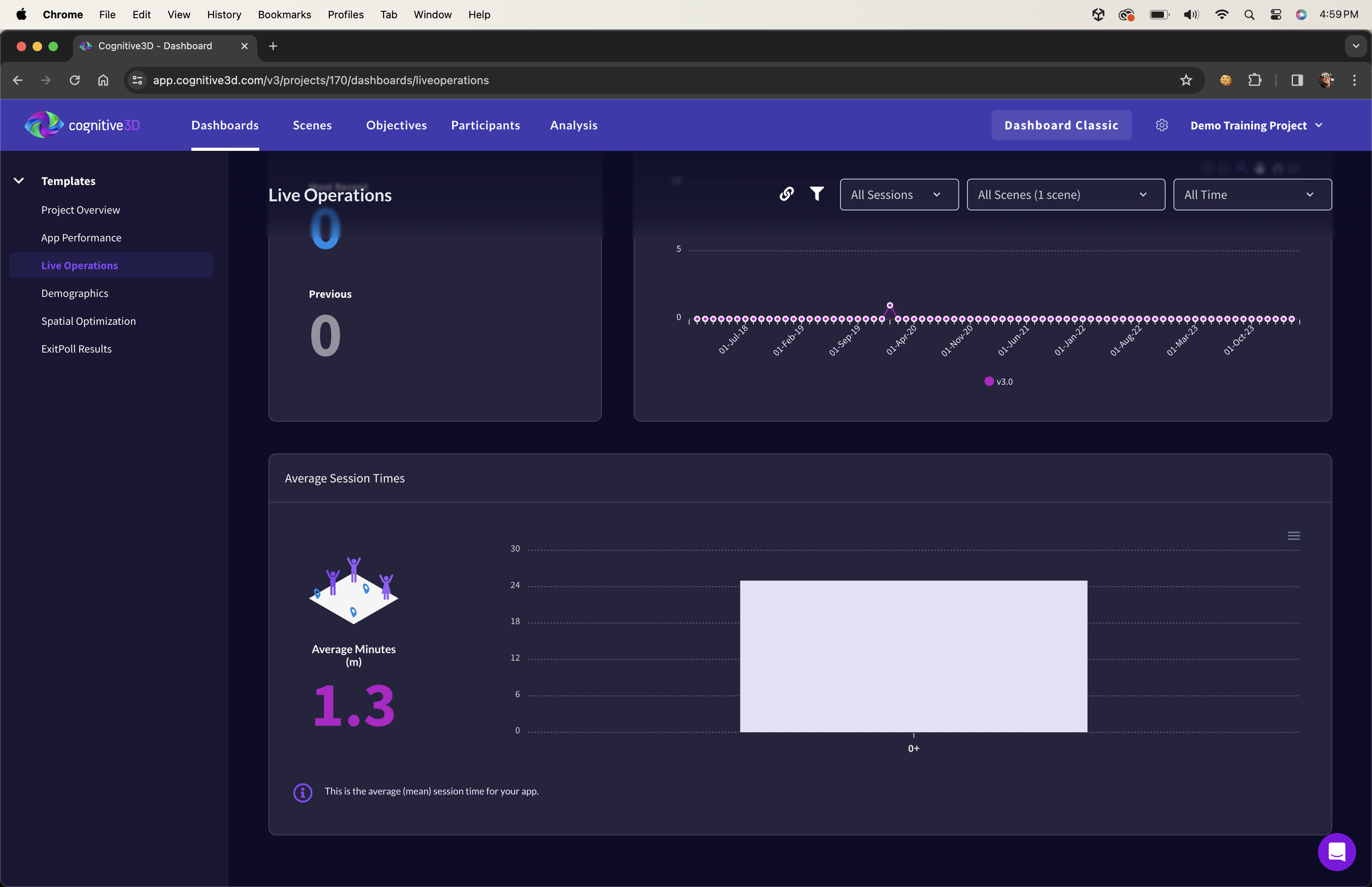

Beyond Spatial Optimization, the portal gives you App Performance, Live Operations, Demographics, and Project Overview (which includes Total Sessions, Session Duration, Average Session By Scene, Comfort Score, Presence Score, and additional visualizations). See the images below demonstrating some of these metrics:

Another area that I found super helpful was Custom Events. This feature allows you to send information from your XR experience at any point you find appropriate. For instance, in my VR training simulation, I sent a custom event for each piece of safety clothing users need to put on before entering an engine generator room where specific clothing is required. I also sent a second custom event whenever a user had put on all the required pieces. This allowed me to find out who was following the safety clothing regulations and who wasn’t. Below, you can see some of the data generated by these custom events.

This is all very helpful data so far, right? But how do you go about defining a custom event? Let me show you some code for instances where I send a custom event each time someone grabs a piece of clothing versus when they’re fully equipped with all required clothing.
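At its core, a custom event is just a name plus optional properties, sent with one chained call. Here is a minimal sketch of that pattern on its own, before the full component from my project. The class name, method name, event name, and property key are hypothetical placeholders; only CustomEvent, SetProperty, and Send are the SDK calls that also appear in my project code.

```csharp
using UnityEngine;

// Hypothetical example component, not part of the Cognitive3D SDK.
public class EquipmentEventExample : MonoBehaviour
{
    // Call this from your own interaction logic,
    // for example when a user picks up an item.
    public void ReportEquipped(string itemName)
    {
        // "Item Equipped" and "Item Name" are placeholder strings;
        // use whatever names make sense for your own dashboards.
        new Cognitive3D.CustomEvent("Item Equipped")
            .SetProperty("Item Name", itemName)
            .Send();
    }
}
```

Keeping the event name fixed and putting the variable part into a property is one way to group events in the portal; in my project I put the object name directly in the event name instead.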

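    // InteractableUnityEventWrapper ships with the Meta Interaction SDK.
    using Oculus.Interaction;
    using UnityEngine;

    // Sends Cognitive3D custom events when this dynamic object is grabbed.
    [RequireComponent(typeof(Cognitive3D.DynamicObject))]
    public class AnalyticsEventWrapper : MonoBehaviour
    {
        [SerializeField]
        private GameManager gameManager;

        // The next two fields were not declared in the original snippet;
        // they are assumed here so the class compiles (assign them in the Inspector).
        [SerializeField]
        private Transform playerTransform;

        [SerializeField]
        private int piecesEquipped;

        private InteractableUnityEventWrapper eventWrapper;
        private Cognitive3D.DynamicObject dynamicObject;

        void Start()
        {
            eventWrapper = GetComponent<InteractableUnityEventWrapper>();
            dynamicObject = GetComponent<Cognitive3D.DynamicObject>();

            eventWrapper.WhenSelect.AddListener(() =>
            {
                // One event per grabbed item, linked to this dynamic object.
                new Cognitive3D.CustomEvent($"User Manually Equipped with {gameObject.name}")
                    .SetDynamicObject(dynamicObject)
                    .Send();

                if (gameManager.isFullyEquipped)
                {
                    // A second event once every required piece is on.
                    new Cognitive3D.CustomEvent("User is now fully equipped")
                        .SetProperty("User Position", playerTransform.position)
                        .SetProperty("User Equipment Pieces", piecesEquipped)
                        .SetProperty("User Time To Get All Equipment", Time.realtimeSinceStartup)
                        .Send();
                }
            });
        }
    }

So What Do I Think About Cognitive3D? Is It Worth It?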

Cognitive3D Walkthrough Video Available Here

You may think that I might be a bit biased since I was sponsored to make the Cognitive3D video, and that’s fair. However, this article is NOT sponsored, which is why I decided to write about it some more and not just do a tutorial, but instead share my own experience.

So far, I've shown you a few concepts, some of the data available, some great visualizations, and even some of the code. But how was my experience when working with their tools? Was it easy to integrate Cognitive3D? Was their system up and running at all times? Did I find any issues? Do I recommend them?

To be honest, I loved their tools. I see huge potential, and as it stands, it will help you measure your users' behaviors like no other tool available today. I mean, you can replay user sessions, track when they put their HMDs on & off, controllers on & off, and basically know everything they’re doing, including how much time they spend looking at specific objects. You can even get a precise representation of it through heatmaps and other powerful visualizations. So, what’s not to like, right?

Well, let me answer the previous questions:

Was it easy to integrate Cognitive3D?

Absolutely. I had 0 issues during the integration, and the process was very straightforward. If you’ve used third-party Unity packages before, you will feel right at home. Mapping HMDs and controllers and configuring dynamic objects was also very easy to do.

Was their system up and running at all times? Did I find any issues?

Yes, it was. I never had an instance where they were not running. However, there were a few instances where their system took longer to load. I noticed delays when trying to replay more than 3-5 sessions concurrently through the scene explorer, but realistically, this is not something you would do all the time. I also encountered a few UI issues, but nothing that prevented me from accessing the data I needed.

Do I recommend Cognitive3D?

This is going to be a hard choice…should I? I am kidding. I absolutely recommend them. I don’t know of a single tool today that provides so many actionable insights, the kind that help you improve your apps and games and keep enhancing your XR experience based on real user data.

Well, I wish I had more time to keep writing about their tools. There’s just so much more to cover, but I will leave that for a future post. If you have any questions, please let me know in the comments below.

Thanks, everyone, and keep making great XR stuff!

Dilmer