AR Foundation With Meta Quest Support Is Here! (Unity Setup & Demos)

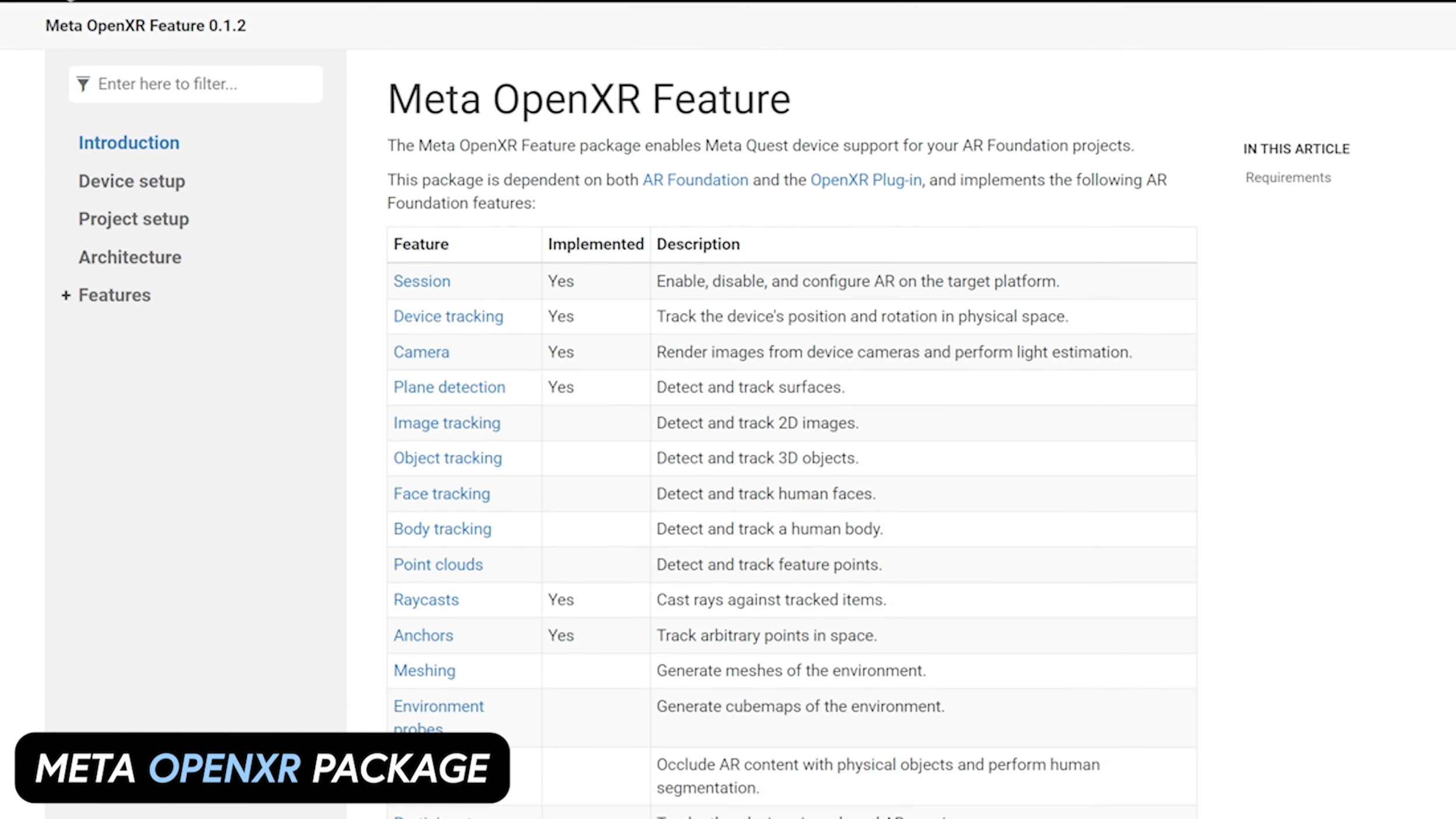

Today, I am very excited to talk to you about all the NEW AR Foundation features that Unity has added for Meta Quest devices. These features are provided through an experimental package called the Meta OpenXR package, which currently implements the following AR Foundation features:

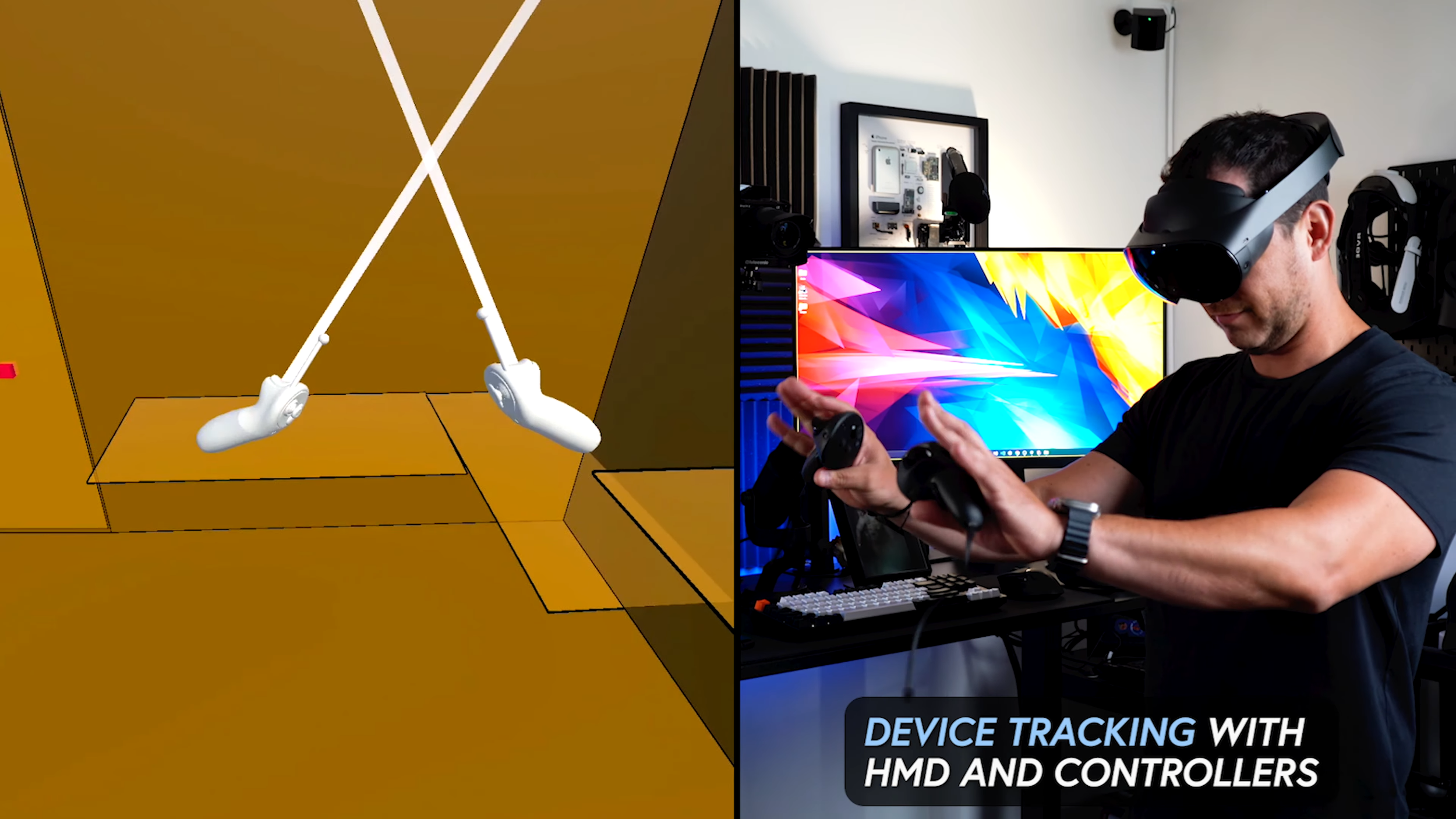

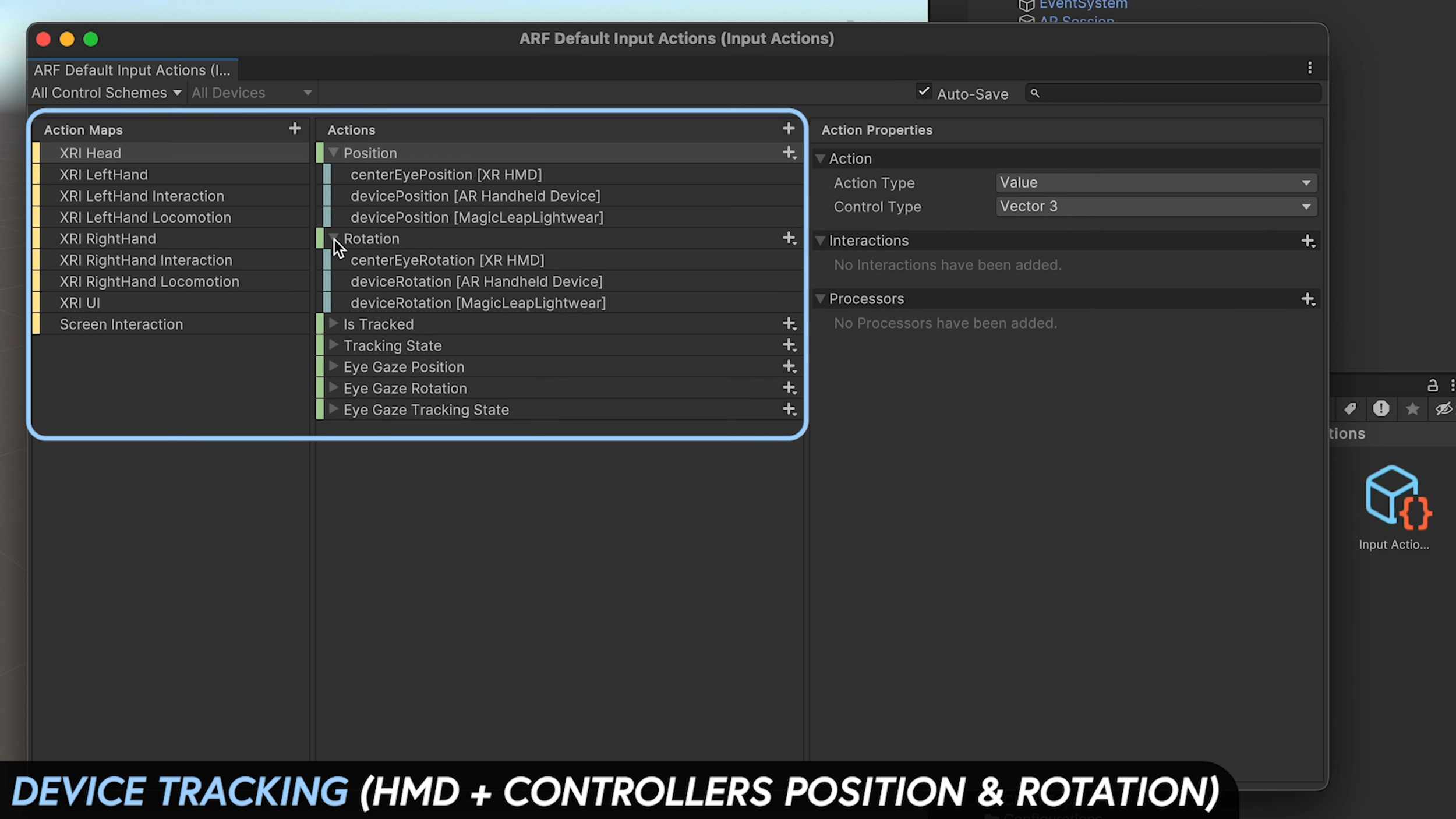

Device Tracking (HMD + Controllers)

Camera component (for Passthrough features)

Plane Detection

Plane Classification

Raycasts

Anchors

First, if you are not familiar with AR Foundation, that’s OK. Let me explain what you can do with these powerful features today:

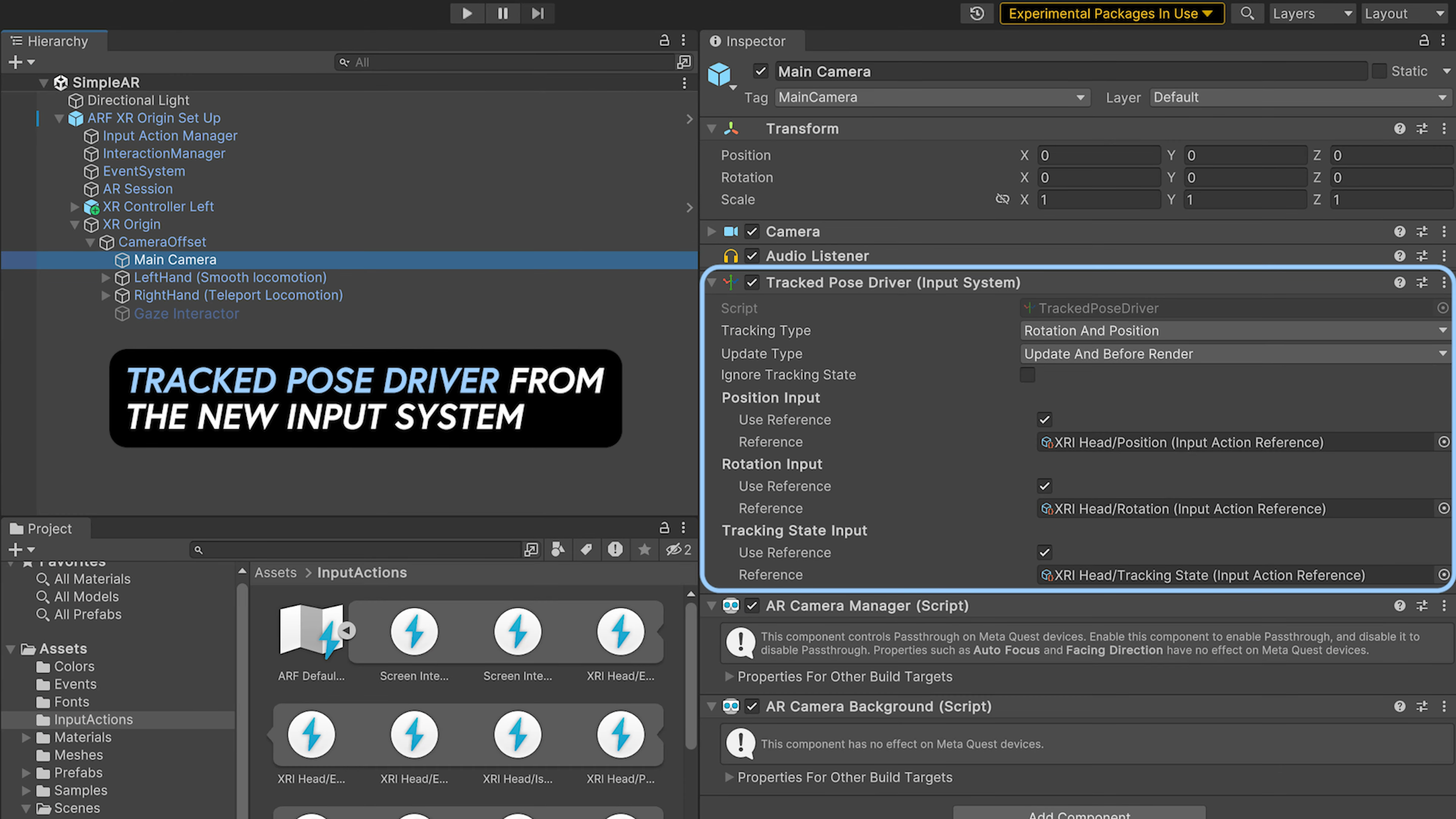

With the Device Tracking feature, you can obtain the position and rotation of the headset and of the controller(s). You can do this either with the Tracked Pose Driver component from the new Input System or through the new Input System's XR input bindings.
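A tracked pose is simply a position plus a rotation quaternion. The engine-agnostic Python sketch below is not Unity's API; the `Pose` class and the `rotate`, `yaw` and `forward()` helpers are hypothetical. It shows how a rotation turns the local forward axis (0, 0, 1), which is Unity's forward convention, into a world-space pointing direction. That direction is what you need if you want to cast a ray from a controller.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (x, y, z, w), same component order as Unity's Quaternion


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q using v' = v + 2w(u x v) + 2u x (u x v)."""
    u, w = q[:3], q[3]
    t = cross(u, v)
    t2 = cross(u, t)
    return tuple(v[i] + 2 * w * t[i] + 2 * t2[i] for i in range(3))


def yaw(degrees: float) -> Quat:
    """Quaternion for a rotation of `degrees` around the +Y (up) axis."""
    half = math.radians(degrees) / 2
    return (0.0, math.sin(half), 0.0, math.cos(half))


@dataclass
class Pose:
    """Hypothetical stand-in for a tracked headset or controller pose."""
    position: Vec3
    rotation: Quat

    def forward(self) -> Vec3:
        # Unity's local forward axis is +Z
        return rotate(self.rotation, (0.0, 0.0, 1.0))


controller = Pose(position=(0.2, 1.1, 0.3), rotation=yaw(90))
print(controller.forward())  # approximately (1, 0, 0): the controller now points along +X
```

In Unity itself, the equivalent is multiplying the tracked rotation by `Vector3.forward`.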

The Camera feature gives you access to the Meta Quest camera for passthrough, so you can, for example, toggle passthrough on and off.

The Plane Detection and Plane Classification features work differently from ARKit or ARCore. Instead, they provide you with the plane data captured during Meta's Room Setup. If you have already completed Room Setup, you are all set. Otherwise, on your Quest, go to Settings > Experimental > Room Setup and select the Setup button. Once this is done, try using the AR Plane Manager together with a plane visualizer, and you will see the planes rendered. Plane classification is also possible because Meta provides a similar feature called the "Semantic Label Component", whose labels you assign during Room Setup. The Meta OpenXR package translates those labels into the standard AR Plane classifications available in AR Foundation.
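Conceptually, this translation is a lookup from room-setup labels to AR Foundation's `PlaneClassification` values. The Python sketch below is only an illustration. The label strings and the mapping table are assumptions modeled on Meta's scene labels and on the names of AR Foundation's classification values. They are not the Meta OpenXR package's actual translation code.

```python
from enum import Enum


class PlaneClassification(Enum):
    # Mirrors the value names of AR Foundation's PlaneClassification enum
    NONE = "None"
    WALL = "Wall"
    FLOOR = "Floor"
    CEILING = "Ceiling"
    TABLE = "Table"
    SEAT = "Seat"
    DOOR = "Door"
    WINDOW = "Window"


# Hypothetical label table: Meta-style semantic labels -> AR Foundation classifications
SEMANTIC_LABEL_TO_CLASSIFICATION = {
    "FLOOR": PlaneClassification.FLOOR,
    "CEILING": PlaneClassification.CEILING,
    "WALL_FACE": PlaneClassification.WALL,
    "TABLE": PlaneClassification.TABLE,
    "COUCH": PlaneClassification.SEAT,
    "DOOR_FRAME": PlaneClassification.DOOR,
    "WINDOW_FRAME": PlaneClassification.WINDOW,
}


def classify(semantic_label: str) -> PlaneClassification:
    """Translate a room-setup label; anything unrecognized falls back to NONE."""
    return SEMANTIC_LABEL_TO_CLASSIFICATION.get(semantic_label.upper(), PlaneClassification.NONE)


print(classify("WALL_FACE").name)  # WALL
print(classify("LAMP").name)       # NONE
```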

The Raycasts feature is also supported. It lets you determine where a ray (defined by an origin and a direction) intersects a trackable. The SimpleAR demo provided by Unity, which has been updated to use these new features, demonstrates this well, and I will walk you through that demo today.
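To make the idea concrete, here is a minimal, engine-agnostic Python sketch of the math behind a raycast against a single infinite plane. It takes the ray origin + t * direction and solves for the t at which the ray meets the plane. This is not how AR Foundation implements raycasts internally. Real detected planes are also bounded by a polygon, which a "within polygon" check would test after this step. `raycast_plane` is a hypothetical helper.

```python
from __future__ import annotations

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def raycast_plane(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3,
                  eps: float = 1e-6) -> Vec3 | None:
    """Return the point where the ray hits the (infinite) plane, or None on a miss."""
    denom = dot(plane_normal, direction)
    if abs(denom) < eps:
        return None  # ray is parallel to the plane
    diff = tuple(p - o for p, o in zip(plane_point, origin))
    t = dot(plane_normal, diff) / denom
    if t < 0:
        return None  # the plane is behind the ray origin
    return tuple(o + t * d for o, d in zip(origin, direction))


# A controller 1.2 m above a floor plane (y = 0), pointing down and forward
hit = raycast_plane(origin=(0.0, 1.2, 0.0), direction=(0.0, -1.0, 1.0),
                    plane_point=(0.0, 0.0, 0.0), plane_normal=(0.0, 1.0, 0.0))
print(hit)  # (0.0, 0.0, 1.2)
```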

Lastly, the Anchors feature also works with this new package. Anchors let you mark a specific point in space that you want the device to track. The device typically does additional work to keep the anchor's position and orientation up to date throughout its lifetime.

Take a look at the images below, which illustrate some of the Meta OpenXR Package features previously mentioned.

Setup AR Foundation With Meta OpenXR In Unity

First, take note of the following requirements:

Unity 2021.3 or newer

AR Foundation 5.1.0-pre.6 or newer

OpenXR 1.7 or newer

Don’t worry too much about the last two requirements. We will add the Meta OpenXR package, which automatically downloads all the required dependencies.
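If you want to double-check installed package versions, note that pre-release versions sort before the final release. For example, `5.1.0-pre.6` is older than `5.1.0`, and `5.1.0-pre.10` is newer than `5.1.0-pre.6`. The Python sketch below encodes that Semantic Versioning ordering in a hypothetical `meets_minimum` helper. It assumes only simple `major.minor[.patch][-prerelease]` strings like the ones above and is not a full SemVer parser; for example, it ignores build metadata.

```python
def version_key(version: str) -> tuple:
    """Sort key following Semantic Versioning precedence for strings like '5.1.0-pre.6'."""
    core, _, prerelease = version.partition("-")
    parts = [int(p) for p in core.split(".")]
    parts += [0] * (3 - len(parts))  # treat '1.7' as '1.7.0'
    if not prerelease:
        # A final release outranks every pre-release of the same core version
        return (*parts, 1, ())
    # Numeric identifiers compare numerically and rank below alphanumeric ones
    ids = tuple((0, int(p), "") if p.isdigit() else (1, 0, p) for p in prerelease.split("."))
    return (*parts, 0, ids)


def meets_minimum(installed: str, minimum: str) -> bool:
    return version_key(installed) >= version_key(minimum)


print(meets_minimum("5.1.0", "5.1.0-pre.6"))       # True
print(meets_minimum("5.1.0-pre.5", "5.1.0-pre.6"))  # False
print(meets_minimum("1.8.2", "1.7"))                # True
```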

Now clone the AR Foundation samples repository (https://github.com/Unity-Technologies/arfoundation-samples/) and adjust a few things to make all of these features work:

Open the project

In Unity, open Window > Package Manager, click +, select Add package by name, and enter com.unity.xr.meta-openxr

Next, let’s change a few settings:

Go to File > Build Settings and switch the platform to Android

Set the Texture Compression to ASTC

Click Player Settings and, under XR Plug-in Management > Android, enable OpenXR and the Meta Quest Feature Group (then fix all errors and warnings)

Under XR Plug-in Management, select OpenXR and, under Interaction Profiles, add “Oculus Touch Controller Profile”.

Select Player in the left pane and open the Android tab. Here we will verify or change the following settings:

Color Space = Linear

Graphics API = OpenGL ES 3.0

Minimum API Level = 29

Scripting Backend = IL2CPP

Target Architecture = ARM64

Install Location = Automatic

AR Foundation Samples

This Unity repository contains an amazing collection of examples. One scene (SimpleAR) has already been updated to work with the Meta OpenXR package, and we will modify another one (Anchors) today.

SimpleAR: use this scene to test plane detection, plane classification, and raycasts. To test plane classification, use the “AR Plane Classification Visualizer” in the AR Plane Manager component on the XR Origin instead of the “AR Plane Debug Visualizer”.

To test the camera passthrough feature, simply toggle the “AR Camera Manager” on and off, or add my script, TogglePassthrough.cs. The script requires you to map an input action property; in my case, I am using primaryButton on the left and right controllers (the steps are shown in my YouTube video). The script is fairly simple. It captures the mapped button's action, and when that action executes, it gets a reference to the AR Camera Manager and toggles it by applying the ! (NOT) operator to the AR Camera Manager's enabled property.
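The toggle logic boils down to a button callback that flips a boolean. The Python sketch below models that flow outside Unity. `InputAction`, `ARCameraManager` and `TogglePassthrough` here are hypothetical stand-ins, not Unity's Input System or AR Foundation classes, and `on_performed`/`press` only mimic subscribing to a button action and pressing the button.

```python
from typing import Callable


class InputAction:
    """Hypothetical stand-in for a button action (e.g. mapped to primaryButton)."""

    def __init__(self) -> None:
        self._listeners: list[Callable[[], None]] = []

    def on_performed(self, callback: Callable[[], None]) -> None:
        self._listeners.append(callback)

    def press(self) -> None:
        # Simulate the user pressing the mapped button
        for callback in self._listeners:
            callback()


class ARCameraManager:
    """Hypothetical stand-in: passthrough is visible while `enabled` is True."""

    def __init__(self) -> None:
        self.enabled = True


class TogglePassthrough:
    def __init__(self, action: InputAction, camera_manager: ARCameraManager) -> None:
        self.camera_manager = camera_manager
        action.on_performed(self.toggle)

    def toggle(self) -> None:
        # Same idea as `enabled = !enabled` in C#
        self.camera_manager.enabled = not self.camera_manager.enabled


button = InputAction()
camera = ARCameraManager()
TogglePassthrough(button, camera)
button.press()
print(camera.enabled)  # False: passthrough off
button.press()
print(camera.enabled)  # True: passthrough back on
```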

Anchors: we need to make a few tweaks to the “Anchors” scene. I suggest copying the Anchors.unity scene and naming the new one “AnchorsV2.unity”, then making these changes:

On the LeftHand Controller and RightHand Controller, set all controller presets

Enable XRInteractorVisual on both controllers

On the Main Camera, set the background color's Alpha to 0

Remove AnchorCreator from “XR Origin”, add AnchorCreatorV2.cs, and assign the TriAxesWithDebugText prefab to it

But what changed in AnchorCreatorV2.cs compared with AnchorCreator.cs?

The changes are very similar to the ones Unity made for the new SimpleAR scene:

I added serialized InputActionReference fields to pass in the actions executed from the controllers.

We need a reference to the trackable type, which we use when raycasting against the planes.

In the OnEnable and OnDisable lifecycle methods, I add and remove listeners on the trigger buttons, which lets us determine when to place an anchor.

When a trigger button is pressed, we create a new pose from the controller's position and rotation and pass it to the RaycastFromHandPose method to determine whether we hit a trackable. If we do, an anchor is created at the hit pose. (Note: Unity will be updating the Anchors.unity scene. For now, this is just an example of how this could be achieved on Meta Quest 2 or Quest Pro, which should help you with your own scenes.)
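The trigger-to-anchor flow described above can be sketched without Unity. In the Python model below, `AnchorCreatorSketch`, `Plane` and this `TrackableType` flag enum are hypothetical; the enum is only loosely modeled on AR Foundation's `TrackableType` flags. The real script uses `ARRaycastManager` and AR Foundation anchors. The sketch turns the controller rotation into a forward ray and skips planes whose trackable type is not requested. It then keeps the closest hit in front of the controller and records an anchor at that position, so the stored anchor is a stand-in for a real anchor's full pose.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field
from enum import Flag, auto

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (x, y, z, w)


class TrackableType(Flag):
    # Hypothetical subset, loosely modeled on AR Foundation's TrackableType flags
    PLANE = auto()
    FEATURE_POINT = auto()


@dataclass
class Plane:
    point: Vec3
    normal: Vec3
    kind: TrackableType = TrackableType.PLANE


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def rotate(q: Quat, v: Vec3) -> Vec3:
    u, w = q[:3], q[3]
    t = cross(u, v)
    t2 = cross(u, t)
    return tuple(v[i] + 2 * w * t[i] + 2 * t2[i] for i in range(3))


@dataclass
class AnchorCreatorSketch:
    """Hypothetical model of the AnchorCreatorV2 trigger -> raycast -> anchor flow."""
    planes: list[Plane]
    trackable_types: TrackableType = TrackableType.PLANE
    anchors: list[Vec3] = field(default_factory=list)

    def raycast_from_hand_pose(self, position: Vec3, rotation: Quat) -> Vec3 | None:
        direction = rotate(rotation, (0.0, 0.0, 1.0))  # controller forward (+Z)
        best_t, best_hit = float("inf"), None
        for plane in self.planes:
            if not (plane.kind & self.trackable_types):
                continue  # not a trackable type we raycast against
            denom = dot(plane.normal, direction)
            if abs(denom) < 1e-6:
                continue  # ray is parallel to this plane
            diff = tuple(p - o for p, o in zip(plane.point, position))
            t = dot(plane.normal, diff) / denom
            if 0 <= t < best_t:  # keep the closest hit in front of the controller
                best_t = t
                best_hit = tuple(o + t * d for o, d in zip(position, direction))
        return best_hit

    def on_trigger_pressed(self, position: Vec3, rotation: Quat) -> None:
        hit = self.raycast_from_hand_pose(position, rotation)
        if hit is not None:
            self.anchors.append(hit)  # stand-in for creating an anchor at the hit position


# Floor plane at y = 0; controller 1.2 m up, pitched 45 degrees down (rotation about +X)
floor = Plane(point=(0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0))
creator = AnchorCreatorSketch(planes=[floor])
s, c = math.sin(math.radians(45) / 2), math.cos(math.radians(45) / 2)
creator.on_trigger_pressed(position=(0.0, 1.2, 0.0), rotation=(s, 0.0, 0.0, c))
print(creator.anchors)  # one anchor on the floor, about 1.2 m in front of the controller
```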

Lastly, to run either of these two scenes, make sure that scene (SimpleAR or Anchors) is the only one added in Build Settings.

Well, that wraps up the new AR Foundation support for Meta Quest devices. If you have any questions about anything mentioned, feel free to drop a comment. Also, subscribe and hit the notification bell; this will help me continue to bring you a lot more content going forward.

Thank you very much everyone!

Dilmer