How to Use Meta Voice SDK to Improve VR and MR User Interactions

Over the last few days I have been running some experiments with Meta’s Voice SDK. This AI tool is very cool, and I decided to try it because one of the features I plan to add to my Unity ChatGPT demos is voice. The idea is to press a button on my VR controller to start voice recording, send the audio to Wit.AI through Meta Voice SDK, and lastly send the generated transcription to the ChatGPT API for source code generation.

So how can you configure Voice SDK?

In my case I used the specific requirements outlined below. I recommend following the same process and versions so your experience goes as smoothly as mine did when I created a new Unity project with Meta Voice SDK (the project is also currently available through Patreon). The requirements are as follows:

Create a new project or use an existing project with Unity 2022.2.1f1 (this version or later is recommended)

Create a new account and application on Wit.AI

Download & Import the Oculus Integration which includes Voice SDK from here (after importing you will find a folder added to Unity > Assets > Oculus > Voice which is what includes all of the Voice SDK components)

Copy and paste the Server Access Token from Wit.AI [App] > Management > Settings to Unity > Assets > Resources > VoiceSDK

Make sure your Unity project Build Settings have the following changes:

Set the platform to Android

Player Settings > Android > Set Scripting Backend IL2CPP

Player Settings > Android > Set Target Architecture ARM64

Player Settings > Android > Set Minimum API Level to API Level 26 or greater

Player Settings > Android > Set Internet Access as required
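If you prefer to apply these settings from code rather than clicking through the menus, here is a minimal sketch of a Unity editor script that could do it. The class name and menu path are hypothetical names I made up for illustration, and the script assumes it lives in an Assets/Editor folder and that the Android build module is installed.

```csharp
// Place this file under Assets/Editor so Unity compiles it as editor-only code.
using UnityEditor;

// Hypothetical helper; the class name and menu path are illustrative only.
public static class QuestBuildSettings
{
    [MenuItem("Tools/Apply Quest Build Settings")]
    public static void Apply()
    {
        // Set the platform to Android.
        EditorUserBuildSettings.SwitchActiveBuildTarget(
            BuildTargetGroup.Android, BuildTarget.Android);

        // Scripting Backend: IL2CPP.
        PlayerSettings.SetScriptingBackend(
            BuildTargetGroup.Android, ScriptingImplementation.IL2CPP);

        // Target Architecture: ARM64 only.
        PlayerSettings.Android.targetArchitectures = AndroidArchitecture.ARM64;

        // Minimum API Level: 26.
        PlayerSettings.Android.minSdkVersion = AndroidSdkVersions.AndroidApiLevel26;

        // Internet Access: Require.
        PlayerSettings.Android.forceInternetPermission = true;
    }
}
```

After the script compiles, the menu entry should appear in the Unity menu bar, and you can still double check the resulting values under Player Settings.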

There are many other requirements to deploy your app and get your Meta Voice SDK demo built. If you are curious about this process, watch this video, which walks you through how to set up your Meta Quest, Meta Quest 2, or Meta Quest Pro for development and ultimately deploy a Unity experience to your headset. Also, if for some reason your Meta Voice SDK features are not working, use this resource to double check your Unity project settings.

Wit.AI Intents, Entities, and AI Training

Why Wit.AI? It is the tool that powers Meta’s Voice SDK. Unity communicates with Wit.AI when we record audio through our microphone using Meta’s Voice SDK. Wit.AI is the server-side software that uses AI to make sense of what we say and categorizes our speech, or what it calls “Utterances”, into intents, entities, or traits. Let me give you an example of how this works based on what I know as of today. I by no means consider myself an expert on this topic, just someone who is consistently learning new tech.

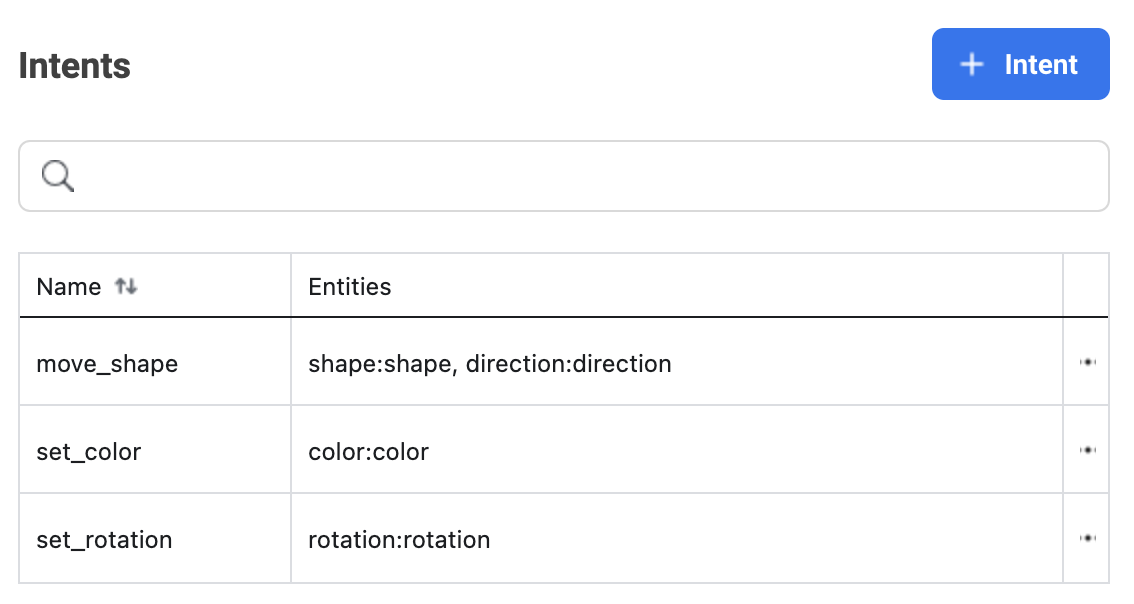

So let me explain what I did. Say we want to perform a few basic actions and control each one with our voice. Let’s look at the following examples, each of which will serve as its own intent.

Examples:

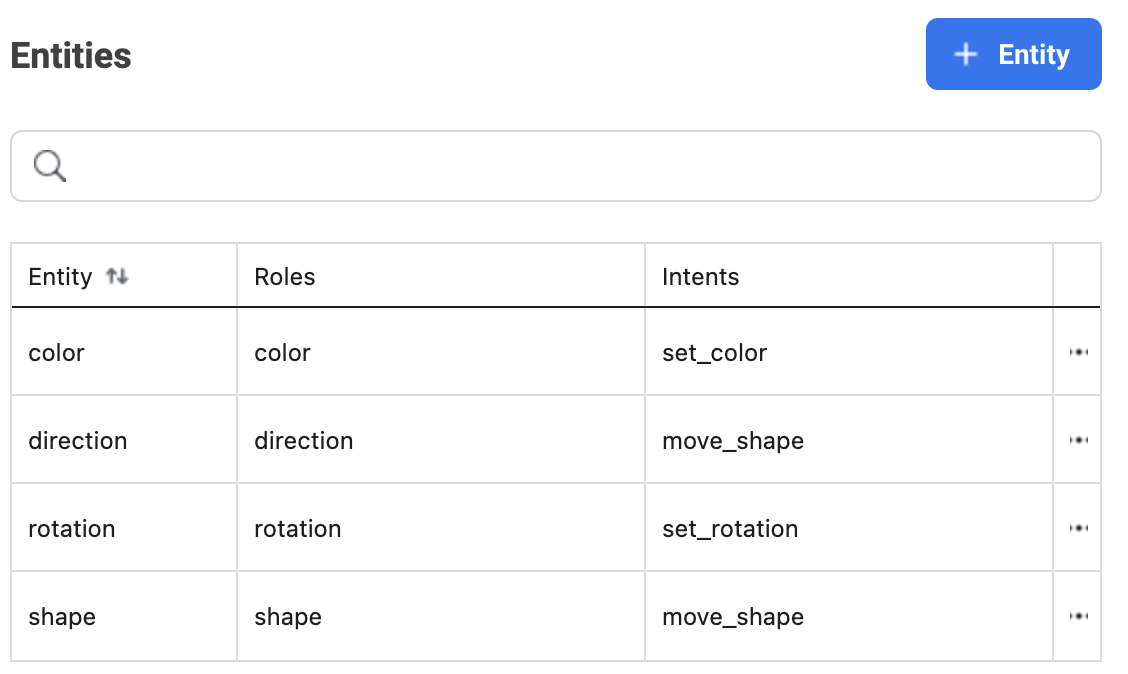

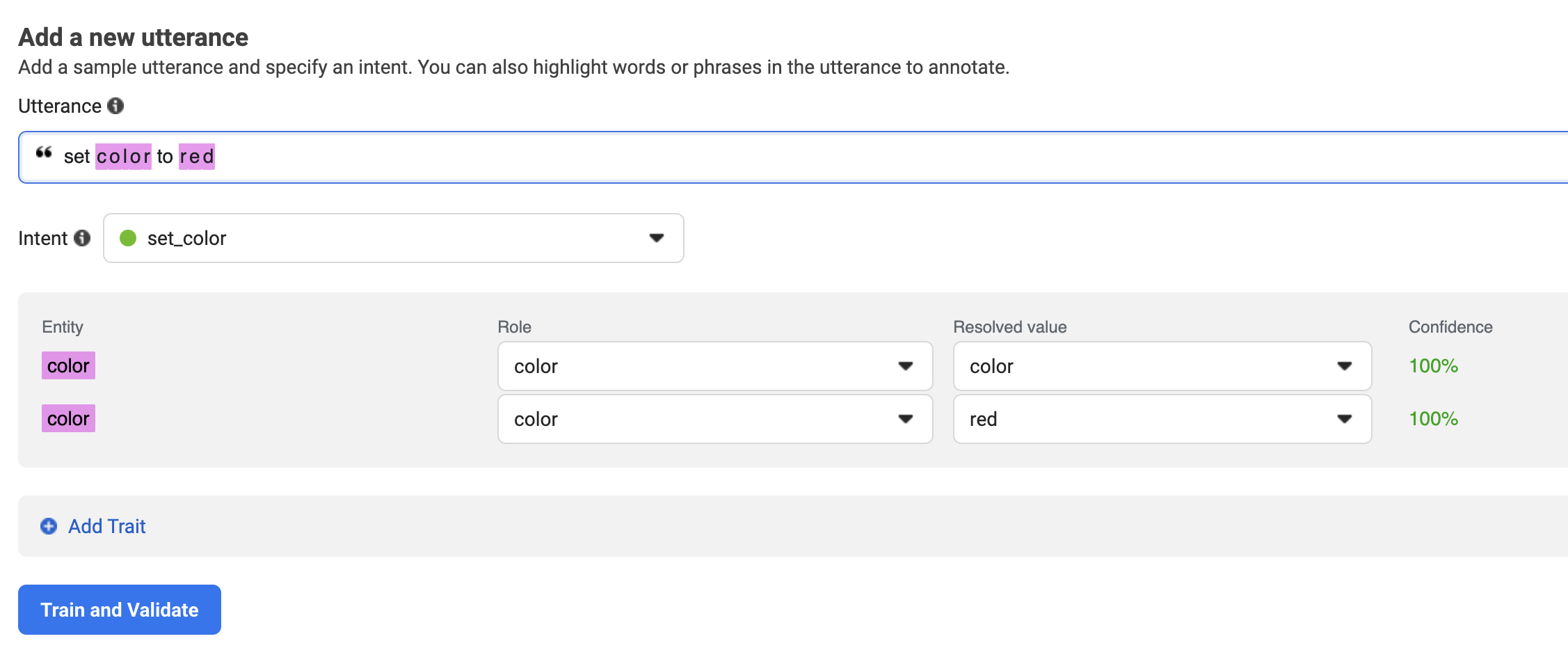

Example 1 - Changing the color of 3D objects in a scene. I want to be able to say something like “set to blue”, “set as blue”, “set blue”, “change color to blue”, or similar, and have that mapped to a C# method that applies a material change through code. To set this up, go to Wit.AI > Our App and add a new “Utterance” such as “set to blue”, then highlight “blue” to create an entity. We can also add more colors to the color entity and train the intent with many variations by speaking phrases similar to the original “Utterance” into the mic.

Example 2 - Similar to the above, but changing the rotation of 3D objects in a scene. In this case we will use an “Utterance” such as “set rotation”, “set object rotation”, or something similar. The entity is rotation because I want to control how much rotation an object has around the Y axis.

Example 3 - In this case I wanted to add two entities to an “Utterance” instead of just one, as we did with color and rotation. Say I want to move an object and also set the direction. Our “Utterance” will be “set cube forward” or “change cube position forward”, and the entities will be shape and direction, given that I want to control a cube or a cylinder and set the direction to forward, backward, left, right, up, or down.

So how does this look in Wit.AI?

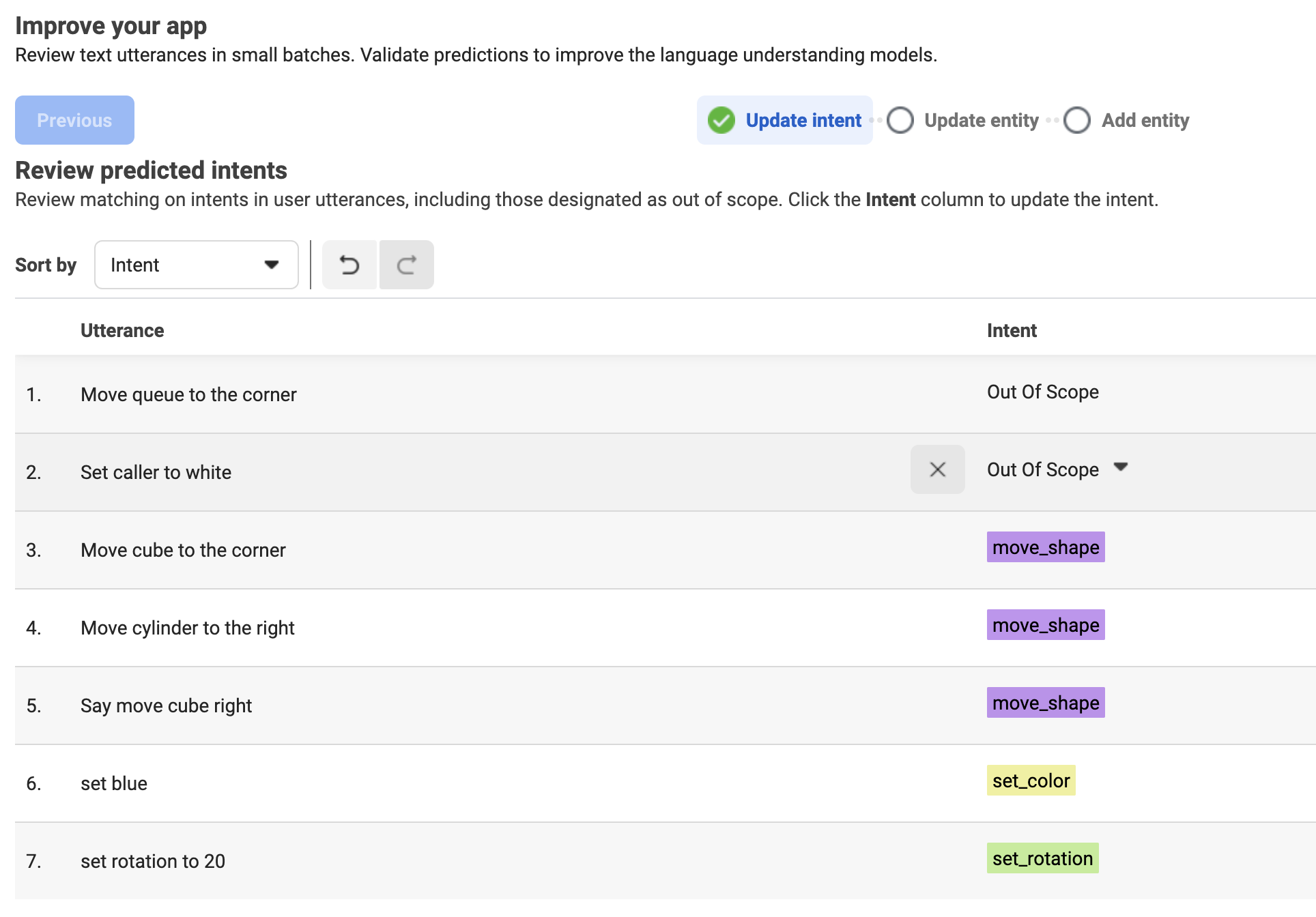

Here are a few images showing how I was able to set up examples 1-3 with little work and only a few settings.

Unity Project Intent Mappings

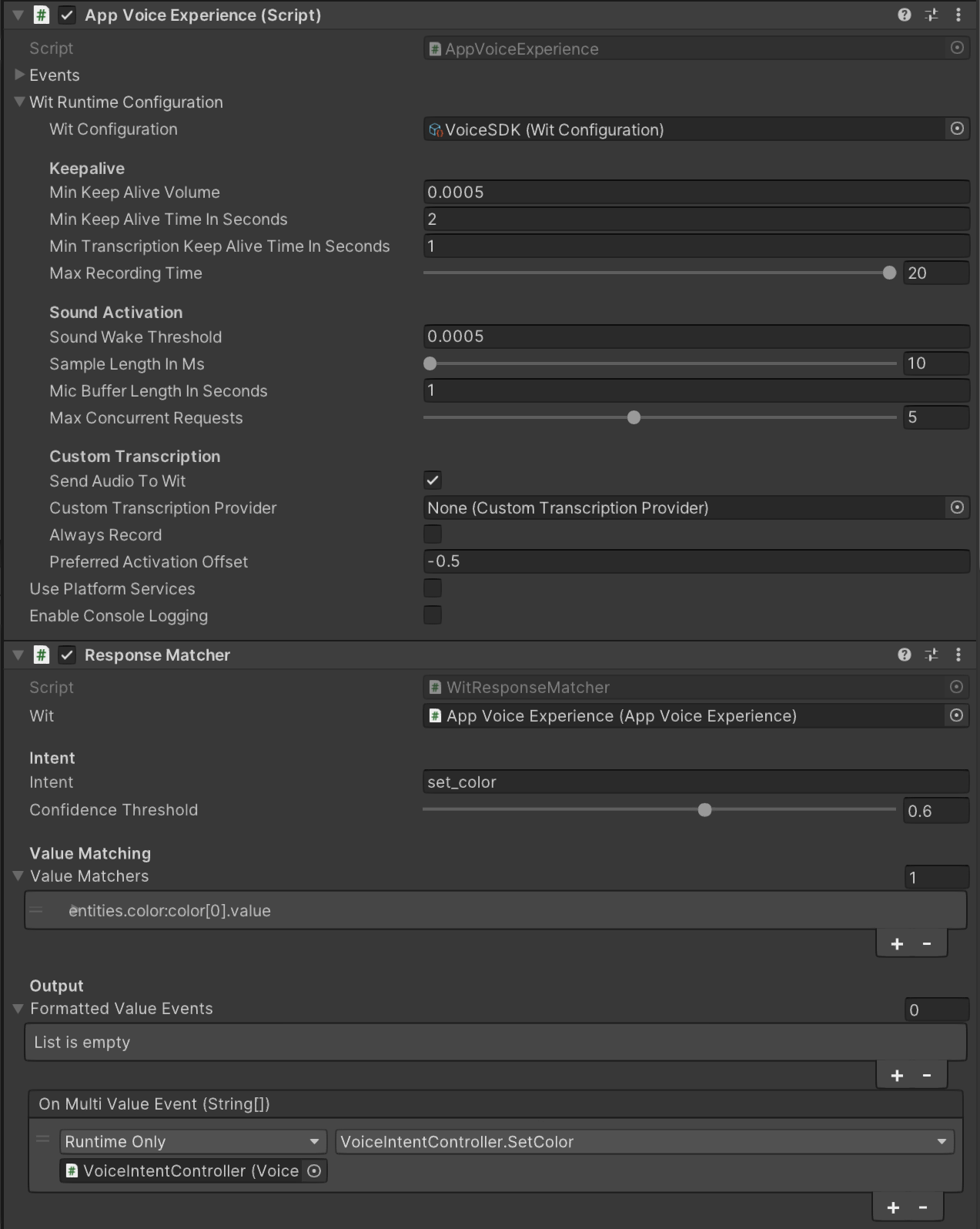

The Unity part was very fun to work on and a lot simpler than I expected. Meta Voice SDK comes with a few handy tools for configuring everything, from communicating with Wit.AI to mapping your intents to your code. So how do we do this?

Let me give you an overview, and you can look at the details by watching my video.

Previously you added the Server Access Token, which should get you set up with Unity to Wit.AI communication

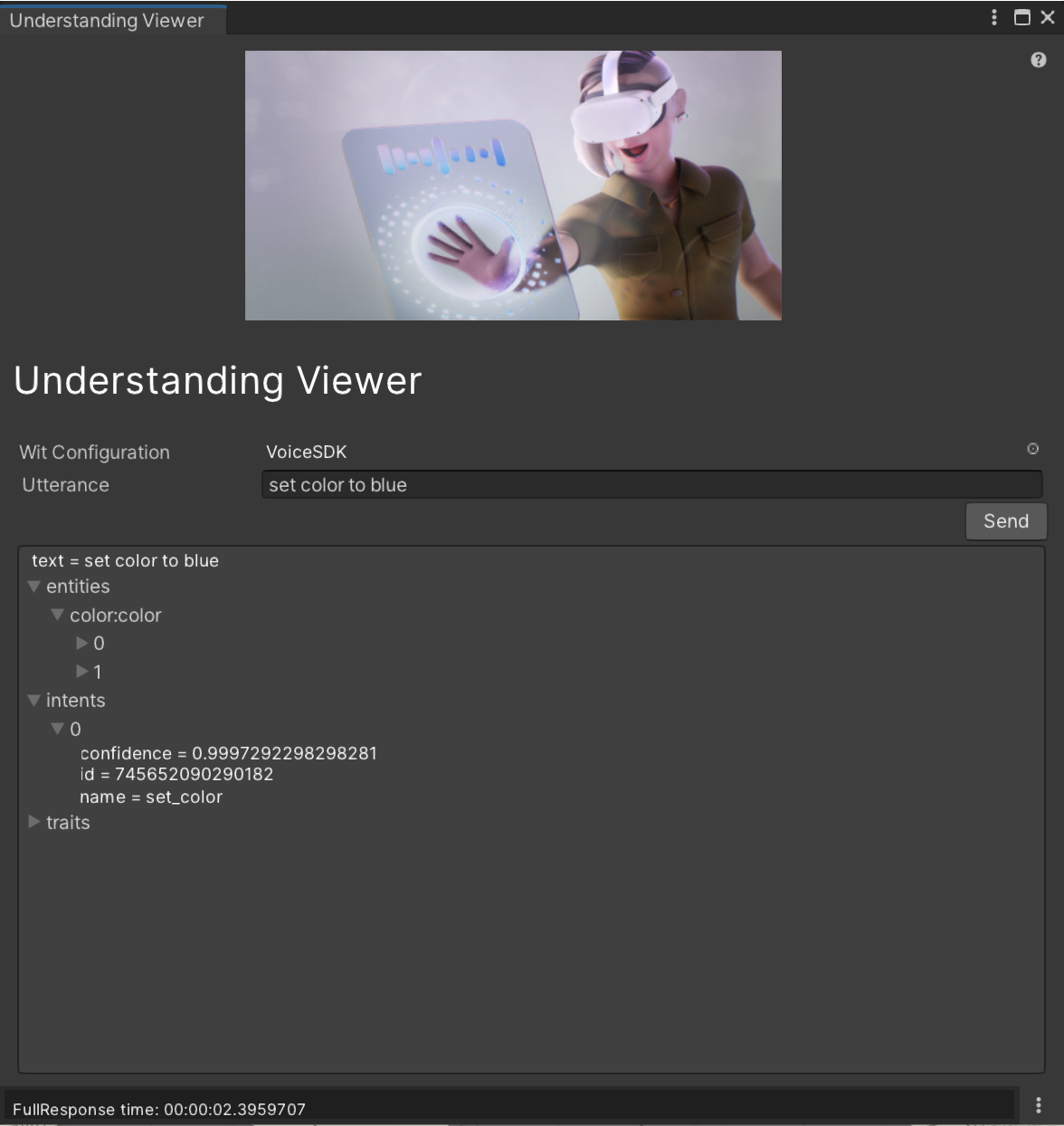

Now you need to define what your intents, entities, and traits (if you have any) are mapped to. To do this, go to Unity > Oculus > Voice SDK > Understanding Viewer

Since we know which “Utterances” we created, we can type “set color to blue” or “move cylinder up”, which should return the intents, entities, or traits matching the “Utterance” that Wit.AI evaluated, see Fig 1.0 and 1.1
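To give a rough idea of the kind of data that comes back, here is a small sketch that reads an intent and an entity value out of a Wit.AI-style JSON response. The sample JSON is hand-written for illustration (it is not captured from a real request), and the intent name change_color and the entity key color:color are hypothetical, so use whatever names you created in your own Wit.AI app. This is plain .NET code using System.Text.Json to show the shape of the response; inside Unity the Voice SDK hands you the parsed response through its own types instead.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public static class WitResponseReader
{
    // Hand-written sample; intent and entity names are hypothetical.
    public const string SampleJson = @"{
      ""text"": ""set color to blue"",
      ""intents"": [ { ""name"": ""change_color"", ""confidence"": 0.99 } ],
      ""entities"": {
        ""color:color"": [
          { ""name"": ""color"", ""role"": ""color"", ""body"": ""blue"", ""value"": ""blue"", ""confidence"": 0.98 }
        ]
      },
      ""traits"": {}
    }";

    // Returns the name of the first listed intent, or null when there is none.
    public static string TopIntent(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        if (doc.RootElement.TryGetProperty("intents", out JsonElement intents)
            && intents.ValueKind == JsonValueKind.Array
            && intents.GetArrayLength() > 0)
        {
            return intents[0].GetProperty("name").GetString();
        }
        return null;
    }

    // Returns every string "value" found under the given entity key.
    public static List<string> EntityValues(string json, string entityKey)
    {
        var values = new List<string>();
        using JsonDocument doc = JsonDocument.Parse(json);
        if (doc.RootElement.TryGetProperty("entities", out JsonElement entities)
            && entities.ValueKind == JsonValueKind.Object
            && entities.TryGetProperty(entityKey, out JsonElement matches)
            && matches.ValueKind == JsonValueKind.Array)
        {
            foreach (JsonElement match in matches.EnumerateArray())
            {
                if (match.TryGetProperty("value", out JsonElement value)
                    && value.ValueKind == JsonValueKind.String)
                {
                    values.Add(value.GetString());
                }
            }
        }
        return values;
    }
}
```

Calling WitResponseReader.EntityValues(WitResponseReader.SampleJson, "color:color") gives you the list of color values, which is the same kind of string data that ends up in your C# method once the intent is mapped.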

To map our intents to our C# methods, you can simply create a MonoBehaviour that implements a method whose argument is an array of strings, as shown in Fig 1.2 and 1.3
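As a rough illustration of that idea (this is a sketch and not necessarily the exact code in the figures), a handler for the color example could look like the following. The class name ColorIntentHandler and the field targetRenderer are hypothetical, and I am assuming the color entity value arrives as the first element of the string array. ColorUtility.TryParseHtmlString is a standard Unity call that understands basic color names such as "blue" or "red".

```csharp
using UnityEngine;

// Hypothetical component name; attach it to any GameObject in the scene.
public class ColorIntentHandler : MonoBehaviour
{
    // Renderer of the 3D object whose color should change (assigned in the Inspector).
    [SerializeField] private Renderer targetRenderer;

    // Method to map the color intent to. Assumption: values[0] holds the
    // color entity value, for example "blue".
    public void SetColor(string[] values)
    {
        if (values == null || values.Length == 0 || targetRenderer == null)
        {
            return;
        }

        string colorName = values[0].Trim().ToLowerInvariant();

        // Accepts basic color names ("red", "blue", "green", ...) and hex strings.
        if (ColorUtility.TryParseHtmlString(colorName, out Color color))
        {
            targetRenderer.material.color = color;
        }
        else
        {
            Debug.LogWarning($"Unrecognized color: {colorName}");
        }
    }
}
```

The null and length checks matter here because a voice request can match the intent without matching the entity, in which case there may be nothing useful in the array.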

Notes: There are also other ways to map to C# methods by using Meta’s Voice SDK specific attributes. Currently I am not doing it that way, but I did get a few comments on YouTube regarding that functionality, which I will cover in the near future. Also, when sending rotation values there are ways to ensure numeric values are extracted from the “Utterance”. Currently I did this as basically as possible, but it can be improved.
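One simple way to tighten up the rotation case is to pull the first number out of whatever text arrives before using it. This is only a sketch of a client-side approach, and the names RotationParser and TryGetDegrees are hypothetical; there may be better options on the Wit.AI side as well.

```csharp
using System.Globalization;
using System.Text.RegularExpressions;

public static class RotationParser
{
    // Matches an optional minus sign, digits, and an optional decimal part.
    private static readonly Regex Number = new Regex(@"-?\d+(\.\d+)?");

    // Tries to read the first number found in the text as degrees.
    // Returns false (and 0 degrees) when the text contains no number.
    public static bool TryGetDegrees(string text, out float degrees)
    {
        degrees = 0f;
        if (string.IsNullOrWhiteSpace(text))
        {
            return false;
        }

        Match match = Number.Match(text);
        return match.Success
            && float.TryParse(match.Value, NumberStyles.Float,
                              CultureInfo.InvariantCulture, out degrees);
    }
}
```

With this in place, a handler can call RotationParser.TryGetDegrees on the entity value and ignore the request when it returns false, instead of applying a bad rotation.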

Testing

To test this I just used Unity Play mode on a Windows PC. You will need a mic, as the “App Voice Experience” script uses your operating system’s default mic to record audio. The Voice SDK demo I created also provides bindings to the full and partial transcript events, which let you check whether your mic is working: you will see the values either on the UI or during a debug session as each transcript event response returns successfully.

Well, there you have it: my first Unity voice experience where I can control 3D objects with my voice. Honestly this is very basic, but hopefully you can see the huge potential and the number of use cases that could be solved with this technology. For my upcoming voice videos I plan to integrate this with my ChatGPT video series, which you can watch today from this playlist.

Thank you!