NEW Unity AI Tools - Unity Muse And Unity Sentis Announced!

Today, I would like to talk about Unity AI and AI as a whole as it relates to Unity, as well as share my own experience.

As you all know, AI development has been accelerating rapidly. It's mind-boggling to me how new AI models seem to emerge every day, which makes it incredibly challenging, and honestly overwhelming, to keep up. We now have ChatGPT in various versions for detailed conversations; DALL-E 2, Stable Diffusion, and Midjourney for art generation; and many others.

Today, Unity is also stepping into the AI game. Unity AI is expanding its toolset, and while many might say that Unity is just jumping on the AI bandwagon, I will explain today why that isn't entirely true.

Unity is introducing two new products to their Unity AI ecosystem: Unity Muse and Unity Sentis, both of which showcase what truly makes AI useful with productivity tools built into the Unity editor.

The images below reflect some of the Unity AI tools coming to the Unity Editor very soon!

Impressive technology, I know. Let’s talk about both products:

The first product is Unity Muse: Unity describes this as “An expansive platform for AI-driven assistance during creation”. I would describe it as a Unity version of ChatGPT, in which results from a neural network are applied to specific Unity use cases. With this platform they are launching a few use cases (or what I see as multiple sub-applications within Unity Muse).

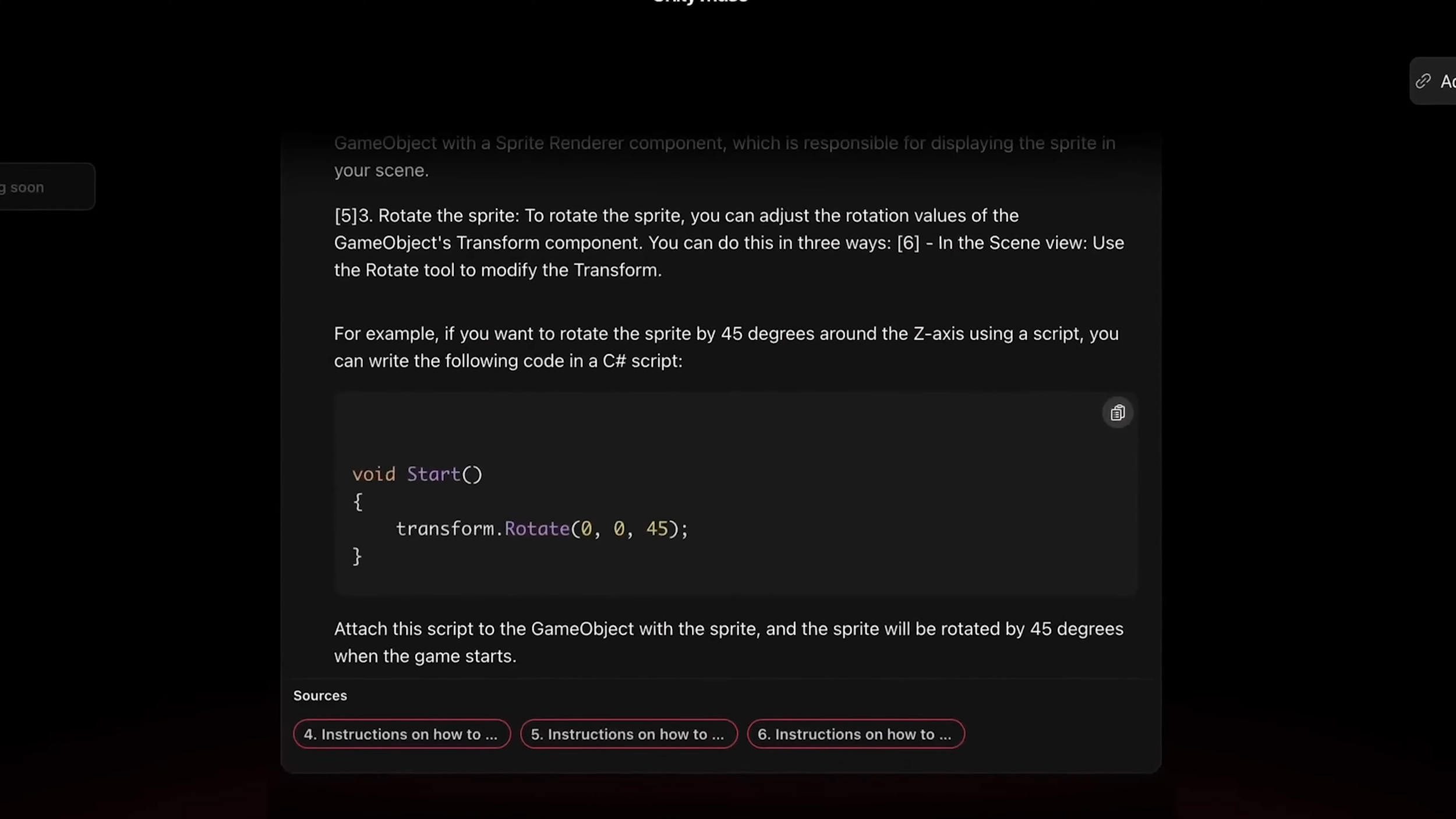

The first sub-application is called Muse Chat: it draws on Unity docs, training resources, and essentially all the data Unity publicly provides to help creators with development. This means we can get very specific Unity answers, including scripts, without needing Stack Overflow or a Chrome tab open with ChatGPT.

Unity also mentions that to create Muse Chat they licensed third-party LLMs (large language models) and integrated them with Unity data. Sylvio Drouin also mentioned on Twitter what I suspected Unity was doing, which is using embeddings with ChatGPT 4 by feeding it Unity docs, training resources, and public data.
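To give a rough idea of what "using embeddings" with documentation can look like, here is a minimal sketch. It is not Unity's implementation, which hasn't been published in detail. The bag-of-words `embed` function is a toy stand-in for a real learned embedding model, the `DOCS` snippets are made-up paraphrases rather than actual Unity documentation text, and every function name here is hypothetical. The idea is simply: turn the question and each doc into a vector, find the closest doc, and paste it into the prompt that would be sent to the language model.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': word counts. A real system would call a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical documentation snippets (paraphrased for illustration only).
DOCS = [
    "Rigidbody.AddForce adds a force to the Rigidbody.",
    "Use Animator.SetTrigger to fire an animation trigger.",
    "Instantiate clones a GameObject or prefab at runtime.",
]

def retrieve(question, docs, k=1):
    """Return the k snippets most similar to the question."""
    q = embed(question)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, docs):
    """Combine the retrieved snippets and the question into one prompt string."""
    context = "\n".join(retrieve(question, docs))
    return f"Answer using this documentation:\n{context}\n\nQuestion: {question}"

if __name__ == "__main__":
    print(build_prompt("How do I add a force to a rigidbody?", DOCS))
```

The prompt built at the end is what would be handed to the chat model, so the model answers with the relevant docs in front of it instead of relying only on what it memorized during training.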

The second sub-application is called Muse Animations: Unity didn’t include it in the closed beta, but I saw it in the Unity Muse announcement. It allows you to provide prompts for specific animation requirements. For instance, we could ask for “Create a Character Jump”, “Walk”, or “Fighting” animations and the tool will generate them for us. This is similar to what I achieved during my ChatGPT prototyping phases, where I built prototypes along the lines of Muse Chat and Muse Animations early on when ChatGPT was first released.

I also read about additional features that are coming to Unity Muse, such as bringing texture and sprite generation into its workflow. To be honest, this is where I have a bit of a concern. I am still having a hard time with this kind of AI feature in general due to ethical concerns: there are too many copyright issues around generative art. Still, I am looking forward to seeing how Unity solves that problem.

Ultimately, Unity wants to give us a Unity Editor with superpowers, where we use text prompts or sketches to help us with our development process.

The second product Unity announced is Unity Sentis, which allows you to:

Embed neural networks in your builds

It also runs nearly everywhere Unity runs, which, as you certainly know, covers most platforms.

I believe this will be very similar to how ML-Agents runs today.

So your main question may be, is Unity really new to AI?

Unity has been doing AI for a long time, and they’ve been doing it very well. It’s just not as widely adopted as ChatGPT because, yes, you need to be somewhat technical to use their ML tools.

For example, ML-Agents, as mentioned earlier, had its first version released on Sep 18, 2017, so it has been 5-6 years since its release.

ML-Agents is a tool that I love because it allows you to use the Unity Editor to train, simulate, and embed machine learning models, which can run nearly everywhere thanks to the power of Barracuda behind the scenes. The Barracuda package is a lightweight cross-platform neural network inference library for Unity which allows you to embed neural network models from PyTorch, TensorFlow, and Keras. If you’re curious, take a look at my ML-Agents videos above that I made previously to get a sneak peek of what can be done with ML-Agents.
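If "inference" sounds abstract, here is a tiny sketch of what an inference library does at its core: it takes weights that were already trained and runs inputs through them to get an output. This is plain Python for illustration only and is not Barracuda's or Sentis's API (those run inside Unity). The weights `W1`, `B1`, `W2`, `B2` are made-up numbers, whereas in practice they would come from a trained model file, and the function names are hypothetical.

```python
def relu(xs):
    """ReLU activation: negative values become zero."""
    return [max(0.0, x) for x in xs]

def dense(inputs, weights, biases):
    """One fully connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    return [
        sum(x * row[j] for x, row in zip(inputs, weights)) + biases[j]
        for j in range(len(biases))
    ]

# Made-up "trained" weights for a 2 -> 2 -> 1 network (illustrative assumption).
W1 = [[1.0, -1.0], [0.5, 2.0]]
B1 = [0.0, 0.5]
W2 = [[1.0], [-1.0]]
B2 = [0.25]

def infer(observation):
    """Forward pass only: no training happens here, just evaluation."""
    hidden = relu(dense(observation, W1, B1))
    return dense(hidden, W2, B2)[0]

if __name__ == "__main__":
    # An agent would feed its observations in and act on the output.
    print(infer([1.0, 2.0]))
```

Training is the expensive part that finds good weights. Inference is the cheap part shown here, which is why it can be embedded in a build and run on the player's device.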

Unity Perception 1.0: is another impressive tool. The Perception package provides a toolkit for generating large-scale datasets for computer vision training and validation.

Unity PySOLO: is a new open-source Python package that provides utilities to analyze and visualize data in the new SOLO format.

Unity Synthetic Homes: is a dataset generator and accompanying large-scale dataset of photorealistic randomized home interiors, built for training computer vision models such as object detection, scene understanding, and monocular depth estimation.

Unity Synthetic Humans: this package gives you the ability to procedurally generate and realistically place diverse groups of synthetic humans in your Unity Computer Vision projects.

Well, I hope this helped you understand where Unity stands and what Unity is building going forward with AI. I personally have a few concerns, which I am sure you do as well, so let me know what you think about these upcoming tools. How do you think Unity will solve ethical concerns about generative art? What tool are you looking to use going forward? Comment below, and once again, thank you for your time. Stay safe and happy coding!